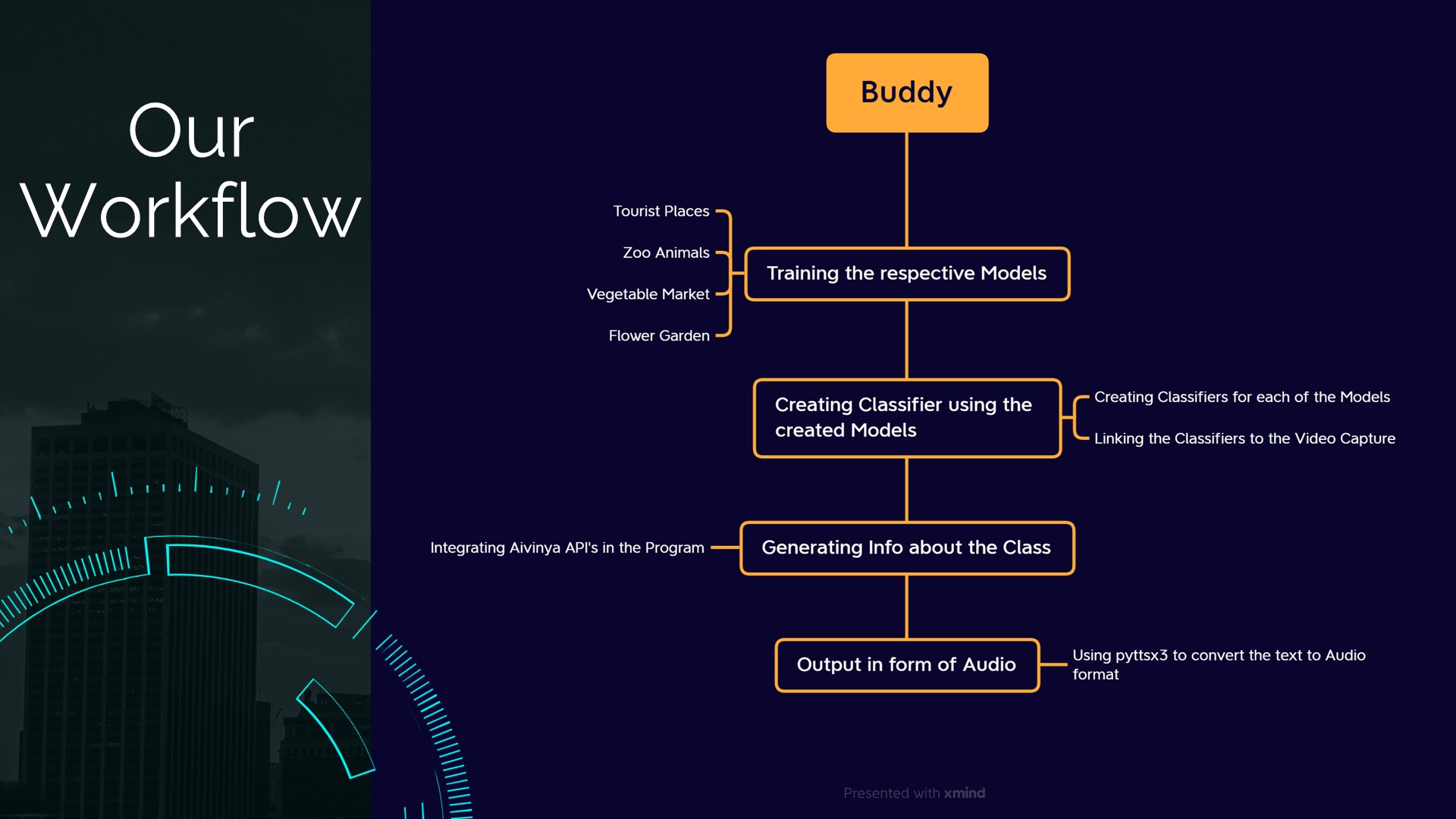

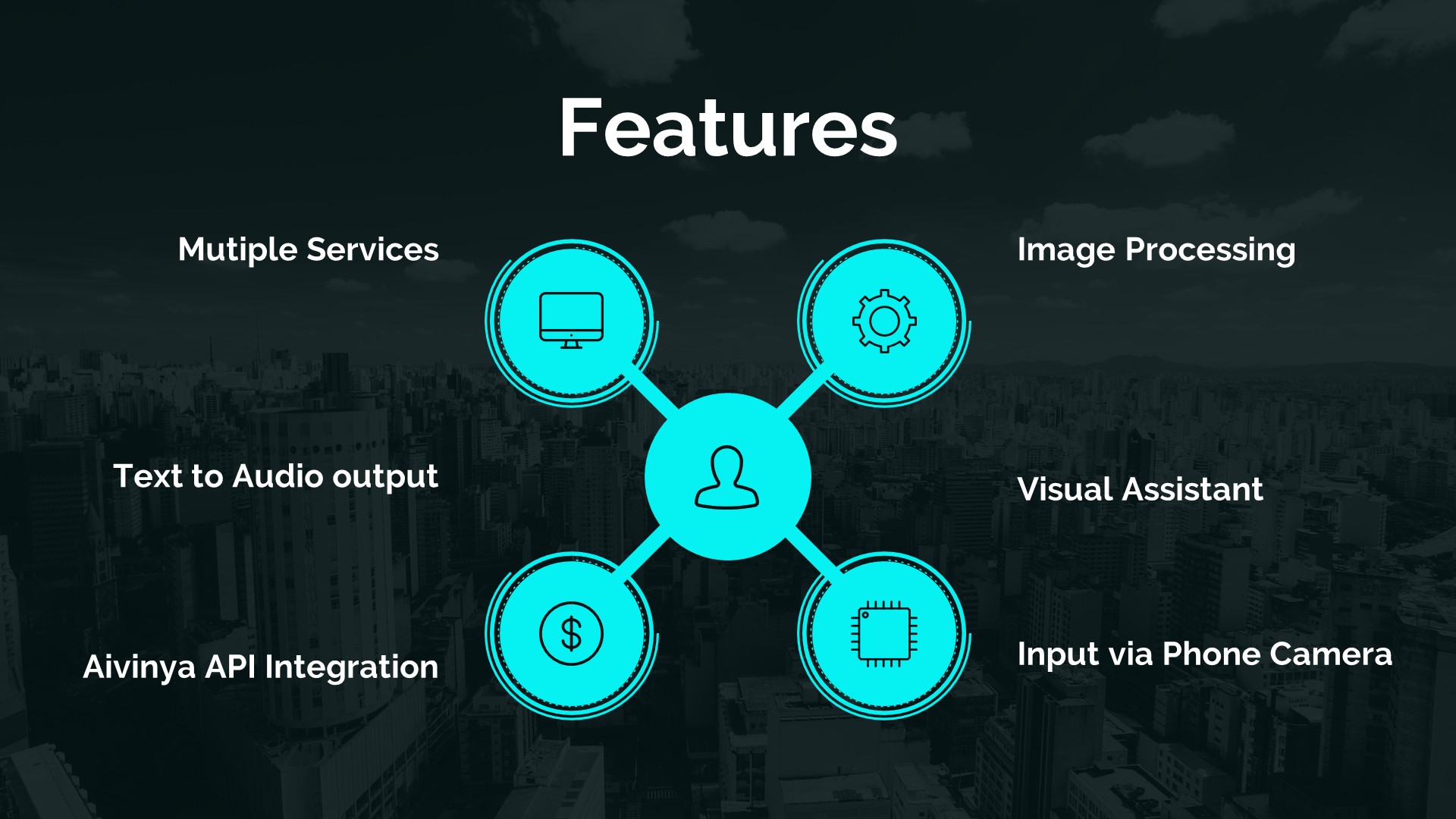

Buddy combines a deep neural network (DNN), computer vision, and natural language processing to identify and describe objects. The DNN processes each image and identifies the objects in it, while the computer vision system generates a detailed description of each object. The natural language processing system then converts that description into audio using text-to-speech technology, so users can hear about the object in clear, concise language.
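The flow described above (image, then detection, then description, then audio) can be sketched roughly as follows. This is only an illustration, not Buddy's actual code: the `Detection` type, `describe`, `run_pipeline`, and the `fake_detect` and `fake_speak` stand-ins are all hypothetical names, and the confidence threshold of 0.5 is an assumed value.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Detection:
    label: str         # object name predicted by the vision model
    confidence: float  # score in [0, 1]


def describe(detections: List[Detection], min_confidence: float = 0.5) -> str:
    """Turn detections into one sentence suitable for text-to-speech."""
    kept = [d for d in detections if d.confidence >= min_confidence]
    if not kept:
        return "I could not recognize anything in this image."
    # Mention the most confident objects first.
    kept.sort(key=lambda d: d.confidence, reverse=True)
    names = ", ".join(d.label for d in kept)
    return f"I can see: {names}."


def run_pipeline(
    image: bytes,
    detect: Callable[[bytes], List[Detection]],
    speak: Callable[[str], bytes],
) -> bytes:
    """Image -> detections -> description -> audio."""
    return speak(describe(detect(image)))


# Stand-ins (assumptions) so the sketch runs without a model or a TTS engine.
def fake_detect(image: bytes) -> List[Detection]:
    return [
        Detection("tulip", 0.91),
        Detection("vase", 0.64),
        Detection("cat", 0.12),  # below the threshold, so it is dropped
    ]


def fake_speak(text: str) -> bytes:
    # A real engine would return audio samples; here we just encode the text.
    return text.encode("utf-8")


audio = run_pipeline(b"raw image bytes", fake_detect, fake_speak)
```

Passing the detector and the speech engine in as functions keeps the three stages independent, so a real model or text-to-speech service could replace either stand-in without touching the rest.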

Besides working from images, Buddy can also take text and speech input, so users can ask questions and receive answers in natural language. Natural language processing lets Buddy work out the intent behind each question, so that the response is relevant to what the user asked.
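To make the idea of "intent" concrete, here is a minimal sketch that uses simple keyword matching as a stand-in for a real language model. The intent names, the keyword sets, and the `detect_intent` function are assumptions made for illustration; Buddy's own approach is not described in this post and is likely more sophisticated.

```python
import re
from typing import Dict, Set

# Hypothetical intents and trigger words, chosen only for this example.
INTENT_KEYWORDS: Dict[str, Set[str]] = {
    "identify": {"what", "which", "identify", "name"},
    "locate": {"where", "located", "location"},
    "history": {"when", "built", "history", "old"},
}


def detect_intent(question: str) -> str:
    """Return the intent whose keywords overlap most with the question."""
    words = set(re.findall(r"[a-z']+", question.lower()))
    scores = {
        intent: len(words & keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    # No keyword matched at all: report that the intent is unknown.
    return best if scores[best] > 0 else "unknown"
```

For example, `detect_intent("Where is the Eiffel Tower located?")` matches two words in the `locate` set and none in the others, so it would be routed to whatever part of the assistant answers location questions.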

Working Video

Future Aspects

As AI technology continues to evolve, Buddy could develop in several directions. One of the most exciting is deeper integration of speech recognition, which would let users interact with Buddy more naturally. Buddy would understand and respond to spoken commands, giving users a hands-free experience.

"Predicting the future isn't magic. It's artificial intelligence." by Dave Waters

Another direction to explore is augmented reality, which overlays digital information on the user's view of the real world and so offers a more immersive experience than a plain photo or description. For example, users could point their phone camera at a tourist attraction, and Buddy could generate an augmented reality experience that shows additional information about the attraction.

Wearable technology is another potential avenue for Buddy's development. With Buddy integrated into wearable devices, users would have an AI assistant available on the go and could look up information about objects quickly without having to pull out their phone.

Summary

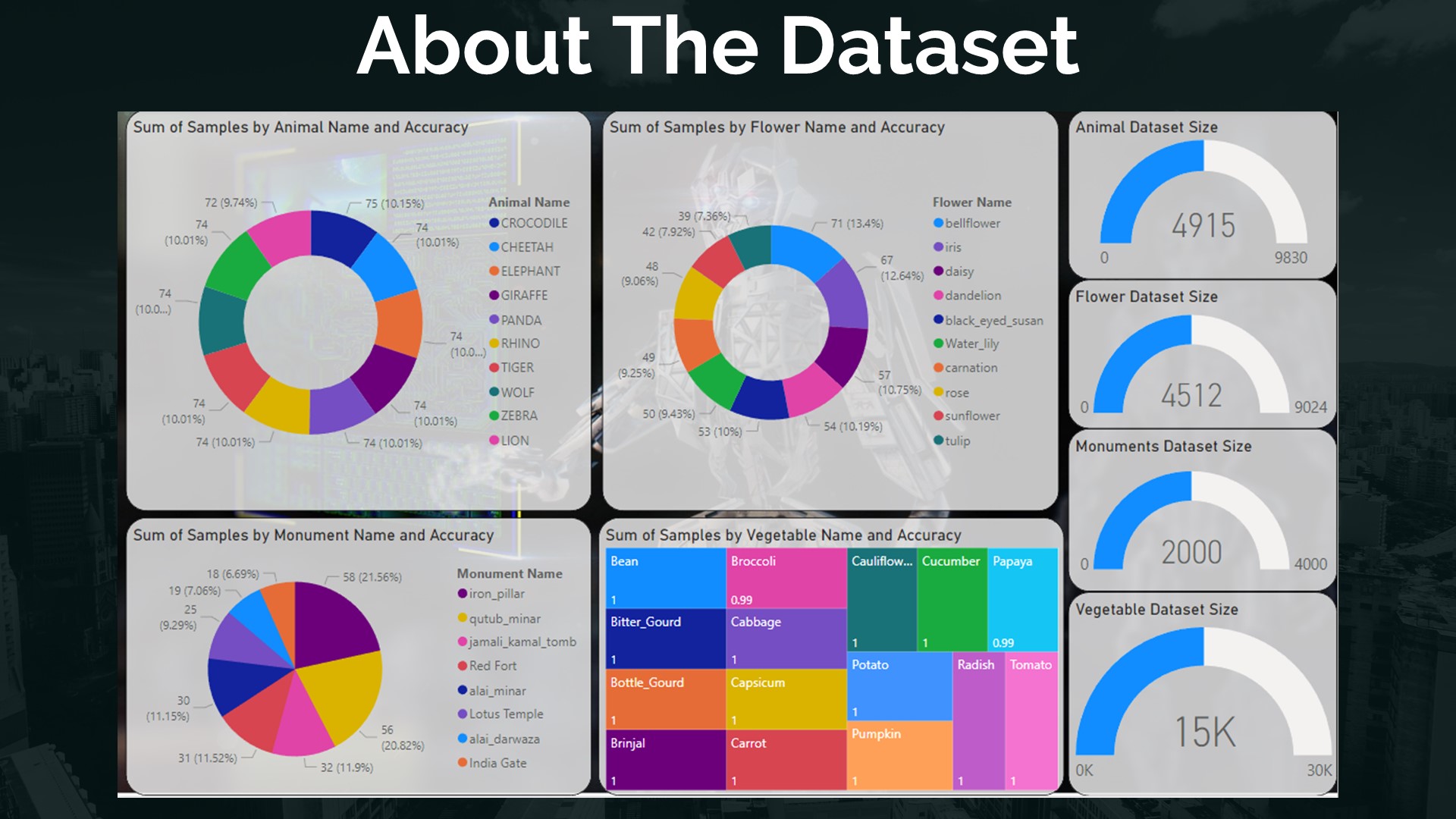

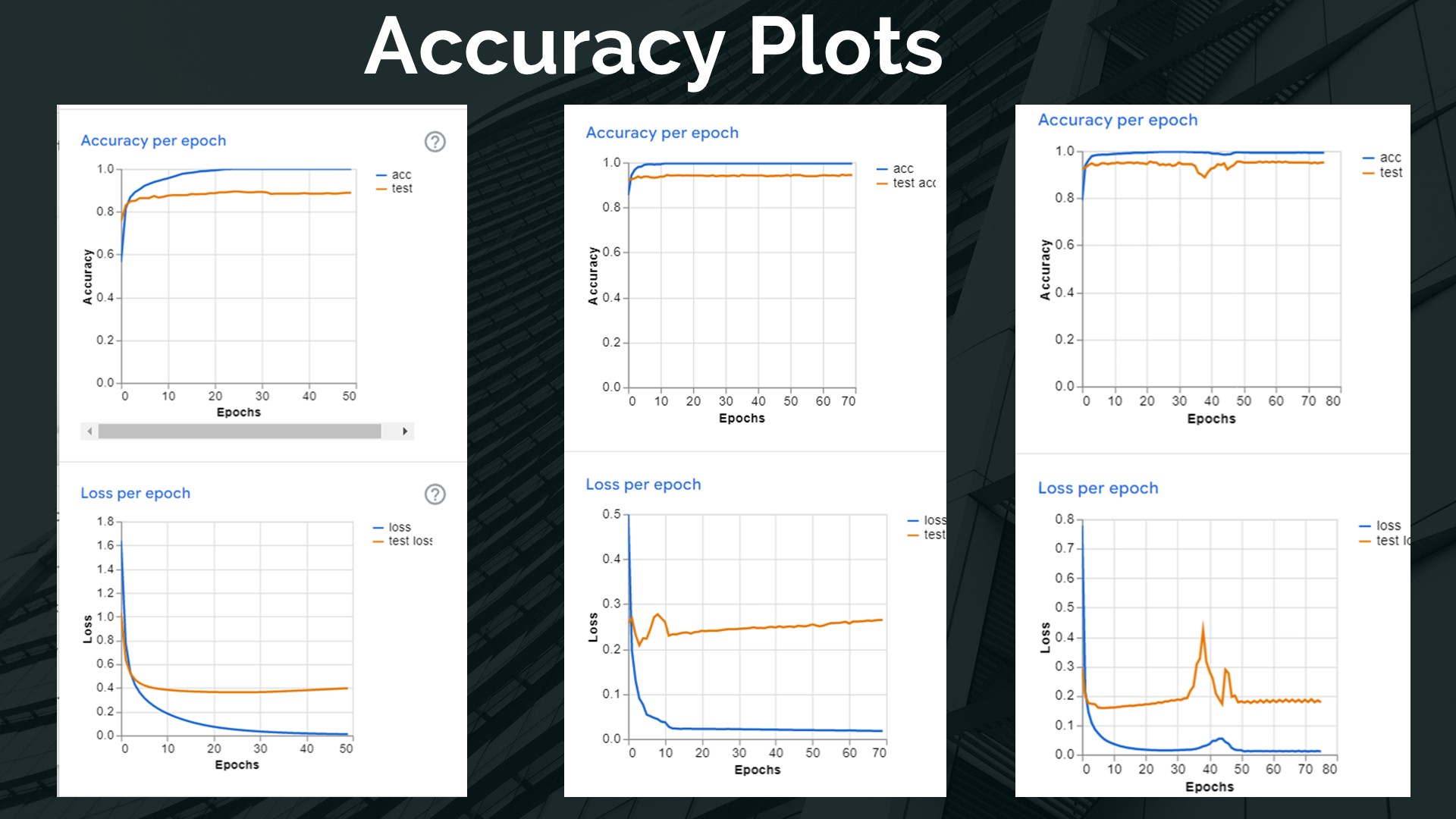

Buddy is an innovative AI assistant that gives users information about a wide range of objects. It is a versatile tool for exploring tourist attractions and for learning about animals, vegetables, flowers, and more. Using computer vision and natural language processing, Buddy identifies and describes objects clearly and concisely.

The integration of the Aivinya API and text-to-speech technology allows detailed descriptions to be converted into audio, making it easier for users to learn about different objects. As the underlying technology advances, Buddy could become an even more valuable resource for users.

Project GitHub Repository