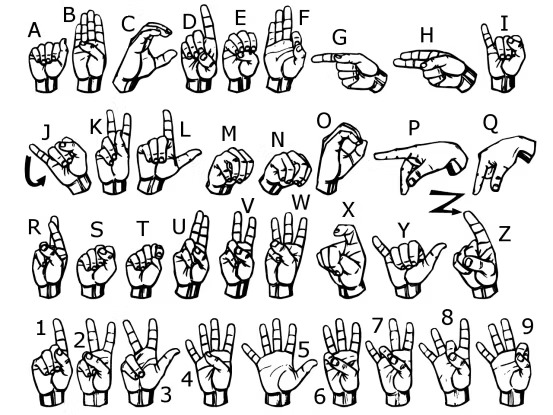

The ASL recognition system is built with OpenCV, a hand-tracking module, and a classification module. It first captures real-time video from a camera using cv2.VideoCapture(), then uses HandDetector from the cvzone library to detect any hands present in each frame.
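A minimal sketch of the capture-and-detect loop, assuming a recent cvzone release (where `HandDetector.findHands()` returns a list of hand dictionaries together with the annotated frame) and a default webcam at index 0. The function name `run_capture_loop` and the 'q'-to-quit key are illustrative choices, not taken from the project.

```python
def run_capture_loop(camera_index=0, max_hands=1):
    """Read frames from a camera and detect hands until 'q' is pressed."""
    # Imported inside the function so this module can be loaded even where
    # OpenCV and cvzone are not installed.
    import cv2
    from cvzone.HandTrackingModule import HandDetector

    cap = cv2.VideoCapture(camera_index)
    detector = HandDetector(maxHands=max_hands)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break  # camera unavailable or stream ended
            # With the default draw=True, findHands returns (hands, annotated_frame).
            hands, frame = detector.findHands(frame)
            if hands:
                # Each hand dict carries 'bbox' as (x, y, w, h); cropping starts here.
                x, y, w, h = hands[0]["bbox"]
            cv2.imshow("Camera", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```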

Once a hand is detected, the system crops the frame around the hand using the bounding box (bbox) coordinates returned by the hand detector. The cropped region is then resized to a fixed 300x300 pixels and stored in a NumPy array. This gives the classification model input of consistent size and aspect ratio, and cropping away most of the background reduces the effect of noise and clutter.
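The plain-Python sketch below computes the two pieces of geometry this step needs. It assumes a 20-pixel margin around the detector's box and an aspect-preserving "letterbox" resize onto a 300x300 canvas; both are assumptions, since the text only says the crop is resized to 300x300. Clamping matters because a box near the frame edge plus a margin can produce negative indices, which NumPy slicing would treat as counting from the end of the array, yielding an empty or wrong crop. The helper names are hypothetical.

```python
def clamp_crop(bbox, frame_w, frame_h, offset=20):
    """Expand an (x, y, w, h) box by `offset` pixels and clamp it to the frame.

    Returns (x1, y1, x2, y2), suitable for slicing: frame[y1:y2, x1:x2].
    """
    x, y, w, h = bbox
    x1 = max(x - offset, 0)
    y1 = max(y - offset, 0)
    x2 = min(x + w + offset, frame_w)
    y2 = min(y + h + offset, frame_h)
    return x1, y1, x2, y2


def letterbox_geometry(crop_w, crop_h, size=300):
    """Scale a crop to fit a size x size square without distorting it.

    Returns (new_w, new_h, pad_x, pad_y): the resized crop dimensions and the
    top-left position at which to paste it so it is centred on the canvas.
    """
    if crop_w <= 0 or crop_h <= 0:
        raise ValueError("empty crop")
    # The longer side is scaled to exactly `size`; the shorter side follows.
    scale = size / max(crop_w, crop_h)
    new_w = min(size, max(1, round(crop_w * scale)))
    new_h = min(size, max(1, round(crop_h * scale)))
    pad_x = (size - new_w) // 2
    pad_y = (size - new_h) // 2
    return new_w, new_h, pad_x, pad_y
```

With OpenCV and NumPy loaded, `frame[y1:y2, x1:x2]` gives the crop (so `crop_w = x2 - x1`, `crop_h = y2 - y1`), `cv2.resize(crop, (new_w, new_h))` scales it (note that `cv2.resize` takes width before height), and the result can be pasted into a white canvas such as `np.full((300, 300, 3), 255, np.uint8)` at rows `pad_y:pad_y + new_h` and columns `pad_x:pad_x + new_w`. A plain `cv2.resize(crop, (300, 300))` is simpler but stretches the hand whenever the crop is not square.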

Classification is handled by cvzone's Classifier module, which is initialized with a pre-trained Keras model (keras_model.h5) and a label file (labels.txt). For this project, the model is trained to classify 7 different signs.
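The `keras_model.h5` / `labels.txt` pair matches what Google's Teachable Machine exports, where each label line carries an index prefix such as `0 Hello`; whether this project's model came from there is an assumption. The hypothetical helpers below read such a file into a clean list of sign names, accepting lines with or without the prefix, so the list can be checked against the 7 trained signs.

```python
def parse_labels(lines):
    """Turn label-file lines into a list of class names, in file order.

    Accepts bare names ("Hello") or an index prefix ("0 Hello") and skips
    blank lines.
    """
    labels = []
    for line in lines:
        text = line.strip()
        if not text:
            continue  # ignore empty lines, e.g. a trailing newline
        index, _, name = text.partition(" ")
        if index.isdigit() and name.strip():
            labels.append(name.strip())  # "0 Hello" -> "Hello"
        else:
            labels.append(text)  # bare name, kept as-is
    return labels


def load_labels(path):
    """Read and parse a labels.txt-style file."""
    with open(path, encoding="utf-8") as fh:
        return parse_labels(fh)
```

For a 7-sign model, `len(load_labels("labels.txt")) == 7` is a quick sanity check that the label file and the model agree.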


For each frame, the system passes the resized hand image to the model through the Classifier's getPrediction() function, which returns the model's per-class scores and the index of the highest-scoring class; that index selects the sign's name from the label list. The predicted sign is then displayed on the screen with cv2.putText(), along with a bounding box around the hand region drawn with cv2.rectangle().
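A small sketch of turning the classifier output into a displayed label. It assumes `scores` is the per-class list that cvzone's `getPrediction()` returns and `labels` holds the sign names in the same order as the model's outputs; `top_prediction`, `draw_result`, the 20-pixel margin, and the colors are hypothetical. Recomputing the argmax is redundant when you already have the index, but it also yields the winning score, which is handy for display or debugging.

```python
def top_prediction(scores, labels):
    """Return (label, index, score) for the highest-scoring class.

    Ties resolve to the first class with the maximum score.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    index = max(range(len(scores)), key=lambda i: scores[i])
    return labels[index], index, scores[index]


def draw_result(frame, bbox, label, offset=20):
    """Draw the hand box and predicted label onto `frame` in place (needs OpenCV)."""
    import cv2  # imported lazily so top_prediction works without OpenCV

    x, y, w, h = bbox
    # Box around the hand, expanded by a small margin.
    cv2.rectangle(frame, (x - offset, y - offset),
                  (x + w + offset, y + h + offset), (255, 0, 255), 4)
    # Label just above the box, kept below the top edge of the frame.
    cv2.putText(frame, label, (x, max(y - offset - 10, 30)),
                cv2.FONT_HERSHEY_COMPLEX, 1.5, (255, 255, 255), 2)
    return frame
```

Inside the capture loop this might look like `scores, index = classifier.getPrediction(img_white, draw=False)` followed by `label, _, _ = top_prediction(scores, labels)` and `draw_result(frame, hands[0]["bbox"], label)`, where `img_white` stands for the 300x300 hand image.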

The system also includes a data collection script (data_collection.py) that allows users to capture and save images of their hand signs for later training and testing of the classification model. The script captures images from the camera and stores them in a specified folder with a unique filename based on the timestamp.
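One way to build the timestamped filenames, as a sketch: the `Image_<seconds>.jpg` pattern, the `prefix`/`ext` parameters, and the collision counter are assumptions rather than the project's actual naming scheme. `time.time()` has finite resolution, so two saves in quick succession could otherwise map to the same name and overwrite each other.

```python
import os
import time


def timestamped_image_path(folder, prefix="Image", ext=".jpg", now=None):
    """Build a unique path such as folder/Image_1700000000.123456.jpg.

    `now` defaults to time.time(). If a file with that name already exists,
    a counter is appended until the name is free.
    """
    ts = time.time() if now is None else now
    stamp = f"{ts:.6f}"
    path = os.path.join(folder, f"{prefix}_{stamp}{ext}")
    counter = 1
    while os.path.exists(path):
        # Same timestamp as an earlier save: add a suffix instead of overwriting.
        path = os.path.join(folder, f"{prefix}_{stamp}_{counter}{ext}")
        counter += 1
    return path
```

In the collection loop, something like `cv2.imwrite(timestamped_image_path("Data/Hello"), img_white)` would save the current 300x300 hand image; the folder name is illustrative.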

"Language is not just about sounds; it is about meaning and understanding. ASL recognition systems have the power to bridge the gap between the Deaf and hearing worlds, allowing for a more inclusive and accessible society." (Author unknown)

Future Aspects

Integration with Wearable Technology: Integration of ASL recognition systems with wearable technology such as smart glasses or smartwatches can allow for a more seamless experience and easier communication.

Increased Vocabulary: ASL recognition systems can be expanded to include a larger vocabulary, including specialized signs and regional dialects.

Access to Education: ASL recognition systems can be used in educational settings to facilitate learning and communication for deaf and hard-of-hearing students.

Mobile Applications: ASL recognition systems can be integrated into mobile applications, allowing users to learn ASL and communicate with deaf individuals on the go.

Summary

Overall, building the ASL recognition system with OpenCV, a hand-tracking module, and a classification module provides a simple and effective way to recognize hand signs in real-time video. The system can still be improved, for example by using more advanced deep learning models for classification, refining the image pre-processing pipeline, and handling occlusion and complex sign gestures.

Project GitHub Repository